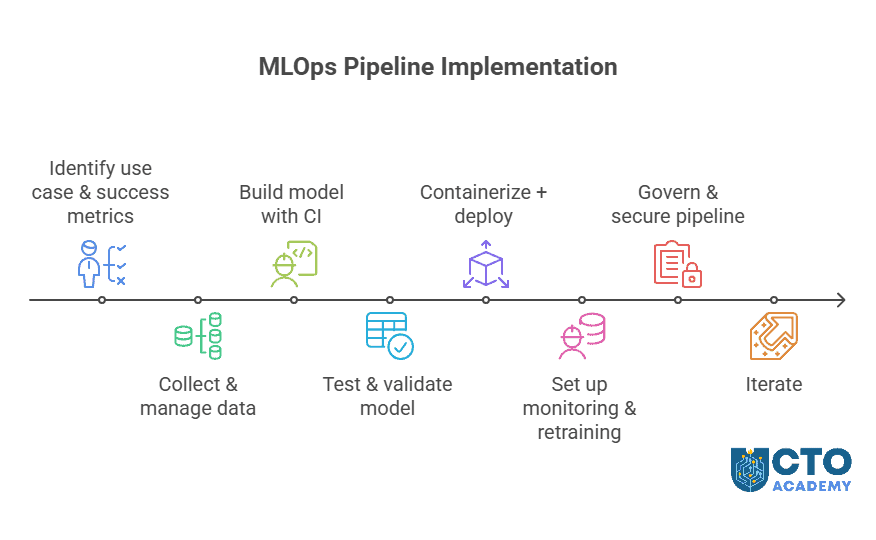

Operationalizing machine learning is no longer optional: AI initiatives have moved beyond prototypes, so tech leaders must ensure that their models are scalable, maintainable, and compliant. This article lays out a seven-step MLOps pipeline for running machine learning in production.

First, here’s a visual presentation of the process:

1. Identify Use Case and Success Metrics

- Clarify the use case and its business impact, for example fraud detection, churn prediction, or dynamic pricing.

- Define measurable success metrics, such as ROC-AUC or inference latency, and align stakeholders on target values.
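To make success metrics testable rather than aspirational, it can help to encode them as explicit thresholds. The following minimal sketch assumes a binary classifier (such as a churn model), a hypothetical `SuccessCriteria` type holding the agreed targets, and a nearest-rank p95 latency. ROC-AUC is computed from its Mann-Whitney formulation so that the example needs only the standard library.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class SuccessCriteria:
    """Hypothetical release targets agreed with stakeholders."""
    min_roc_auc: float
    max_p95_latency_ms: float


def roc_auc(labels, scores):
    """ROC-AUC via the Mann-Whitney formulation: the probability that a random
    positive example scores higher than a random negative one (ties count half)."""
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    if not pos or not neg:
        raise ValueError("need at least one positive and one negative example")
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(pos) * len(neg))


def p95(values):
    """Nearest-rank 95th percentile of a list of latencies."""
    ordered = sorted(values)
    rank = math.ceil(0.95 * len(ordered))
    return ordered[rank - 1]


def meets_criteria(criteria, labels, scores, latencies_ms):
    """Evaluate a candidate model against the agreed success criteria."""
    auc = roc_auc(labels, scores)
    latency = p95(latencies_ms)
    return {
        "roc_auc": auc,
        "p95_latency_ms": latency,
        "passed": auc >= criteria.min_roc_auc and latency <= criteria.max_p95_latency_ms,
    }
```

For example, `meets_criteria(SuccessCriteria(0.80, 50.0), [0, 0, 1, 1], [0.1, 0.4, 0.35, 0.8], [12, 15, 20, 48])` reports an ROC-AUC of 0.75, so it fails the 0.80 target even though latency is within budget. In practice you would likely use a library implementation such as scikit-learn's `roc_auc_score`; the pairwise loop here is only for illustration.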

2. Collect and Manage Data

- Centralize and version training data using tools like DVC or Delta Lake.

- Automate ingestion and validation to ensure data quality across iterations.
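Versioning and validation can be illustrated without committing to a particular platform. This sketch fingerprints a dataset snapshot by its SHA-256 content hash (similar in spirit to how DVC identifies file versions by hash, though it does not use DVC's API) and checks each CSV row against a small schema. The column names and bounds in `SCHEMA` are hypothetical, chosen for a churn-prediction dataset.

```python
import csv
import hashlib
import io

# Hypothetical schema: column name -> (type, min, max); None means unbounded.
SCHEMA = {
    "customer_id": (int, 1, None),
    "tenure_months": (int, 0, 600),
    "monthly_spend": (float, 0.0, None),
}


def dataset_fingerprint(raw_bytes):
    """Content hash used to tag a dataset snapshot so runs can cite exactly
    which data they were trained on."""
    return hashlib.sha256(raw_bytes).hexdigest()


def validate_rows(csv_text, schema=SCHEMA):
    """Return a list of human-readable validation errors (empty if clean)."""
    errors = []
    reader = csv.DictReader(io.StringIO(csv_text))
    missing = set(schema) - set(reader.fieldnames or [])
    if missing:
        return [f"missing columns: {sorted(missing)}"]
    for line_no, row in enumerate(reader, start=2):  # line 1 is the header
        for col, (typ, lo, hi) in schema.items():
            try:
                value = typ(row[col])
            except (TypeError, ValueError):
                errors.append(f"line {line_no}: {col}={row[col]!r} is not {typ.__name__}")
                continue
            if lo is not None and value < lo:
                errors.append(f"line {line_no}: {col}={value} below {lo}")
            if hi is not None and value > hi:
                errors.append(f"line {line_no}: {col}={value} above {hi}")
    return errors
```

Running `validate_rows` on a batch where one row has a negative tenure and another has a non-numeric one returns two errors; an ingestion job would typically refuse to train on that snapshot and store its fingerprint alongside the error report.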

3. Build Models with Continuous Integration

- Use CI/CD tools to train models automatically when data or code changes.

- Include automated unit tests, model evaluation, and logging to maintain reproducibility.
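A common pattern is to end the CI job with a quality gate that fails the build when a retrained model regresses. The sketch below compares candidate metrics against a stored baseline, logs every comparison, and returns a non-zero exit code on failure. The file layout (two JSON files mapping metric names to values), the `max_regression` tolerance of 0.01, and the assumption that every metric is higher-is-better are all hypothetical.

```python
import json
import logging
import sys

logging.basicConfig(level=logging.INFO, format="%(levelname)s %(message)s")
log = logging.getLogger("ci_gate")


def quality_gate(candidate, baseline, max_regression=0.01):
    """Return a list of failures; assumes every metric is higher-is-better."""
    failures = []
    for name, base_value in baseline.items():
        if name not in candidate:
            failures.append(f"{name}: missing from candidate metrics")
            continue
        delta = candidate[name] - base_value
        log.info("%s baseline=%.4f candidate=%.4f delta=%+.4f",
                 name, base_value, candidate[name], delta)
        if delta < -max_regression:
            failures.append(f"{name}: regressed by {-delta:.4f}")
    return failures


def main(candidate_path, baseline_path):
    """Load both metric files, run the gate, and return a process exit code."""
    with open(candidate_path) as f:
        candidate = json.load(f)
    with open(baseline_path) as f:
        baseline = json.load(f)
    failures = quality_gate(candidate, baseline)
    for message in failures:
        log.error(message)
    return 1 if failures else 0


if __name__ == "__main__" and len(sys.argv) == 3:
    # Example CI invocation: python ci_gate.py metrics.json baseline.json
    sys.exit(main(sys.argv[1], sys.argv[2]))
```

A CI step could run this right after training (the `ci_gate.py` name is illustrative). Because the logs record both values and the delta for every metric, a failed build also leaves a reproducible trail of why it failed.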

4. Validate and Test Models

- Run A/B tests, canary releases, or shadow deployments before full rollout.

- Confirm that models perform within accepted tolerances.

- Ensure that rollback mechanisms are in place.
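For a canary release, traffic assignment should be deterministic so each user consistently hits the same model version, and the rollback rule should be agreed in advance. This sketch hashes a user ID into one of 10,000 buckets and compares error rates between the baseline and the canary. The function names and the absolute tolerance of 0.02 are assumptions; shadow deployments (which mirror traffic to the new model without serving its responses) and A/B tests with significance testing are not covered here.

```python
import hashlib


def routes_to_canary(user_id: str, canary_percent: float) -> bool:
    """Deterministically place a user in the canary bucket so the same user
    always sees the same model version for the whole rollout."""
    digest = hashlib.sha256(user_id.encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:8], "big") % 10_000  # 0..9999
    return bucket < canary_percent * 100  # e.g. 5.0% -> buckets 0..499


def should_roll_back(baseline_errors: int, baseline_total: int,
                     canary_errors: int, canary_total: int,
                     tolerance: float = 0.02) -> bool:
    """Hypothetical rollback rule: roll back when the canary's error rate exceeds
    the baseline's by more than `tolerance` (absolute difference)."""
    if baseline_total == 0 or canary_total == 0:
        return False  # not enough traffic to judge yet
    baseline_rate = baseline_errors / baseline_total
    canary_rate = canary_errors / canary_total
    return canary_rate - baseline_rate > tolerance
```

Ramping up is then a matter of raising `canary_percent` step by step while `should_roll_back` stays false. Users already in the canary remain there as the percentage grows, because their bucket never changes. A real rule would usually also require a minimum sample size before acting.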

5. Containerize and Deploy

- Use Docker to package models together with their runtime dependencies.

- Choose Kubernetes or serverless infrastructure for scalable deployment.

- Monitor resource usage and response time.
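What goes inside the container is usually a small serving process that exposes a prediction endpoint and a health check the orchestrator can probe. The following standard-library sketch illustrates that shape. `predict` is a placeholder standing in for a real model, and the `/predict` and `/healthz` paths, port 8080, and the per-request `latency_ms` field are assumptions, not requirements of Docker or Kubernetes.

```python
import json
import time
from http.server import BaseHTTPRequestHandler, HTTPServer


def predict(features):
    """Placeholder model: replace with a real model loaded once at startup."""
    return {"score": min(1.0, max(0.0, 0.1 * sum(features)))}


class ModelHandler(BaseHTTPRequestHandler):
    def do_GET(self):
        # Health endpoint for liveness/readiness probes.
        if self.path == "/healthz":
            self._send(200, {"status": "ok"})
        else:
            self._send(404, {"error": "not found"})

    def do_POST(self):
        if self.path != "/predict":
            self._send(404, {"error": "not found"})
            return
        start = time.perf_counter()
        length = int(self.headers.get("Content-Length", 0))
        try:
            body = json.loads(self.rfile.read(length) or b"{}")
            result = predict(body["features"])
        except (ValueError, KeyError, TypeError):
            self._send(400, {"error": "expected JSON body with a 'features' list"})
            return
        # Report server-side response time so it can be monitored.
        result["latency_ms"] = round((time.perf_counter() - start) * 1000, 3)
        self._send(200, result)

    def _send(self, status, payload):
        data = json.dumps(payload).encode("utf-8")
        self.send_response(status)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(data)))
        self.end_headers()
        self.wfile.write(data)


def serve(port=8080):
    """Container entrypoint: bind all interfaces so the port can be published."""
    HTTPServer(("0.0.0.0", port), ModelHandler).serve_forever()
```

Calling `serve()` as the container's entrypoint would listen on port 8080, and a Kubernetes liveness or readiness probe could target `/healthz`. A production service would more likely use a dedicated web framework or model server and export latency to a metrics system rather than returning it in each response.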

6. Monitor and Retrain Automatically

- Track data drift, concept drift, and model degradation.

- Implement automated triggers for retraining.

- Route alerts to human reviewers when anomalies arise.
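Data drift on a numeric feature is often quantified with the Population Stability Index (PSI), which compares the binned distribution of recent production data against a reference sample such as the training set. The sketch below computes PSI with equal-width bins over the reference range and maps the score to an action. The cut-offs of 0.1 (alert a reviewer) and 0.25 (trigger retraining) follow a widely used rule of thumb, but treat them as assumptions to calibrate per feature. PSI only detects shifts in input distributions; concept drift needs label-based monitoring.

```python
import math


def psi(expected, actual, bins=10):
    """Population Stability Index: sum over bins of (a_i - e_i) * ln(a_i / e_i),
    where e_i and a_i are the shares of reference and production values in bin i."""
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # guard against a constant reference feature

    def shares(values):
        counts = [0] * bins
        for v in values:
            # Equal-width bins over the reference range; out-of-range values
            # are clamped into the first or last bin.
            idx = min(max(int((v - lo) / width), 0), bins - 1)
            counts[idx] += 1
        # A small floor avoids division by zero and log(0) for empty bins.
        return [max(c / len(values), 1e-6) for c in counts]

    e, a = shares(expected), shares(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


def drift_action(score, alert_at=0.1, retrain_at=0.25):
    """Map a PSI score to an action; thresholds are a common rule of thumb."""
    if score >= retrain_at:
        return "retrain"  # e.g. enqueue a retraining job and notify reviewers
    if score >= alert_at:
        return "alert"    # ask a human to investigate before acting
    return "ok"
```

A scheduled job could compute `psi` for each monitored feature over, say, the last day of traffic and act on the most severe `drift_action` result.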

7. Ensure Governance and Security

- Audit model lineage and access controls.

- Enforce compliance with GDPR, HIPAA, or other sector-specific regulations.

- Document decisions and risk assessments.
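Lineage auditing benefits from records that cannot be silently edited after the fact. This sketch stores a hypothetical `LineageRecord` (dataset hash, code commit, approver, risk notes) in an append-only log where each entry includes the hash of the previous one, so altering any earlier record makes `verify()` fail. It is tamper-evident only if the latest hash is also kept somewhere the editor cannot rewrite, and it does not implement access control or demonstrate compliance with GDPR, HIPAA, or any other regulation.

```python
import hashlib
import json
from dataclasses import asdict, dataclass, field
from datetime import datetime, timezone


@dataclass
class LineageRecord:
    """Hypothetical audit record tying a model version to its inputs and sign-off."""
    model_name: str
    model_version: str
    dataset_sha256: str
    code_commit: str
    approved_by: str
    risk_notes: str
    created_at: str = field(default_factory=lambda: datetime.now(timezone.utc).isoformat())


class AuditLog:
    """Append-only, hash-chained log: each entry embeds the previous entry's hash."""

    GENESIS = "0" * 64

    def __init__(self):
        self.entries = []

    def append(self, record: LineageRecord) -> None:
        prev_hash = self.entries[-1]["hash"] if self.entries else self.GENESIS
        body = json.dumps({"record": asdict(record), "prev_hash": prev_hash}, sort_keys=True)
        digest = hashlib.sha256(body.encode("utf-8")).hexdigest()
        self.entries.append({"body": body, "hash": digest})

    def verify(self) -> bool:
        """Recompute every hash and check that the chain links are intact."""
        prev_hash = self.GENESIS
        for entry in self.entries:
            if hashlib.sha256(entry["body"].encode("utf-8")).hexdigest() != entry["hash"]:
                return False
            if json.loads(entry["body"])["prev_hash"] != prev_hash:
                return False
            prev_hash = entry["hash"]
        return True
```

The `risk_notes` field is where the decisions and risk assessments from the step above could be recorded, so each deployed version carries its own justification.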

By structuring your ML lifecycle with these MLOps principles, you reduce technical debt and increase your team’s velocity from research to production.